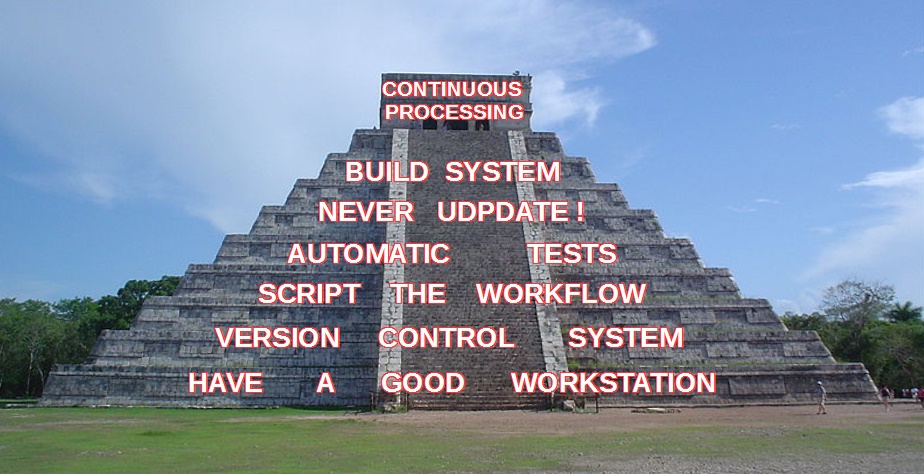

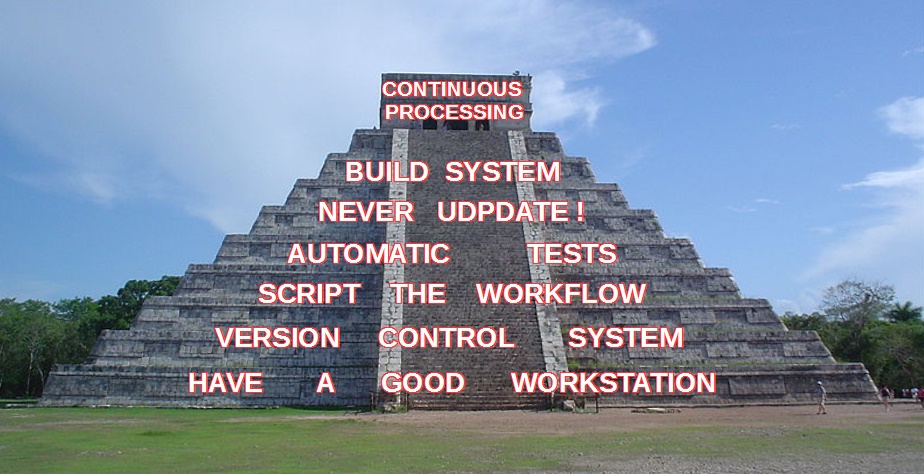

Maturity of teams working on data

The way towards continuous processing

- Everyone in the team must have a good workstation

- Use version control

- Script the whole workflow from scratch

- Tests should be automatic too

- Don't update data, augment it !

- Use a build system for your data

- Continuous processing is like refill

Agenda

Everyone in the team must have a good workstation

- a good internet connection

- a lot of RAM and (fast) disk

- a scriptable command line

- ... for every team member, even testers !

Would you repair this ?

in here ?

Use version control

- eg : git

- even for a hackathon !

- commit your doc

Script the whole workflow from scratch

How many city per departement ?

.mode tabs

.import comsimp2015.txt city

.import depts2015.txt departement

CREATE INDEX idx_city on city (DEP, COM);

CREATE INDEX idx_dept on departement (DEP);

.mode csv

.output result.csv

SELECT departement.DEP, departement.NCCENR AS DEP_NAME, COUNT(*)

FROM departement

JOIN city ON city.DEP = departement.DEP

GROUP BY departement.DEP, departement.NCCENR;

How many city per departement ?

What will happen if there is an error in the second index ?

set -e

wget http://www.insee.fr/fr/methodes/nomenclatures/cog/telechargement/2015/txt/depts2015.txt

wget http://www.insee.fr/fr/methodes/nomenclatures/cog/telechargement/2015/txt/comsimp2015.zip

unzip comsimp2015.zip

sqlite3 cities.sqlite <<$script$

.headers ON

.mode tabs

.import comsimp2015.txt city

.import depts2015.txt departement

CREATE INDEX idx_city on city (DEP, COM);

CREATE INDEX idx_dept on city (DEP); -- <-- error here

.mode csv

.output result.csv

SELECT departement.DEP, departement.NCCENR AS DEP_NAME, COUNT(*)

FROM departement

JOIN city ON city.DEP = departement.DEP

GROUP BY departement.DEP, departement.NCCENR;

$script$

How to deal with errors ?

-

3 ways to re-run what have failed :

- Comment the sources

- Reset everything before you start

- Run each step under condition

Comment the part that have already run - DON'T !

set -e

# wget http://www.insee.fr/fr/methodes/nomenclatures/cog/telechargement/2015/txt/depts2015.txt

# wget http://www.insee.fr/fr/methodes/nomenclatures/cog/telechargement/2015/txt/comsimp2015.zip

# unzip comsimp2015.zip

sqlite3 cities.sqlite <<$script$

.headers ON

.mode tabs

-- .import comsimp2015.txt city

-- .import depts2015.txt departement

-- CREATE INDEX idx_city on city (DEP, COM);

CREATE INDEX idx_dept on departement (DEP);

.mode csv

.output result.csv

SELECT departement.DEP, departement.NCCENR AS DEP_NAME, COUNT(*)

FROM departement

JOIN city ON city.DEP = departement.DEP

GROUP BY departement.DEP, departement.NCCENR;

$script$

Reset everything before you start - Painfull

rm cities.sqlite

rm depts2015.txt

rm comsimp2015.zip

set -e

wget http://www.insee.fr/fr/methodes/nomenclatures/cog/telechargement/2015/txt/depts2015.txt

wget http://www.insee.fr/fr/methodes/nomenclatures/cog/telechargement/2015/txt/comsimp2015.zip

unzip comsimp2015.zip

sqlite3 cities.sqlite <<$script$

.headers ON

.mode tabs

.import comsimp2015.txt city

.import depts2015.txt departement

CREATE INDEX idx_city on city (DEP, COM);

CREATE INDEX idx_dept on departement (DEP);

.mode csv

.output result.csv

SELECT departement.DEP, departement.NCCENR AS DEP_NAME, COUNT(*)

FROM departement

JOIN city ON city.DEP = departement.DEP

GROUP BY departement.DEP, departement.NCCENR;

$script$

Conditional execution

set -e

if [ ! -e depts2015.txt ]; then

curl -O "http://www.insee.fr/fr/methodes/nomenclatures/cog/telechargement/2015/txt/depts2015.txt"

fi

if [ ! -f comsimp2015.zip ]; then

curl -O "http://www.insee.fr/fr/methodes/nomenclatures/cog/telechargement/2015/txt/comsimp2015.zip"

fi

if [ ! -f comsimp2015.txt ]; then

unzip comsimp2015.zip

fi

if ! echo "SELECT * FROM city LIMIT 0;" | sqlite3 cities.sqlite 2> /dev/null ; then

sqlite3 cities.sqlite <<$script$

.mode tabs

.import comsimp2015.txt city

CREATE INDEX idx_city on city (DEP, COM);

$script$

fi

if ! echo "SELECT * FROM departement LIMIT 0;" | sqlite3 cities.sqlite 2> /dev/null ; then

sqlite3 cities.sqlite <<$script$

.mode tabs

.import depts2015.txt departement

CREATE INDEX idx_dept on departement (DEP);

$script$

fi

if [ ! -f result.csv ]; then

sqlite3 cities.sqlite <<$script$

.headers ON

.mode csv

.output result.csv

SELECT departement.DEP, departement.NCCENR AS DEP_NAME, COUNT(*)

FROM departement

JOIN city ON city.DEP = departement.DEP

GROUP BY departement.DEP, departement.NCCENR;

$script$

fi

Forces to structure the code around chunks of data

Tests should be automatic too

- test existence

- test quantities

- test integration

- be creative !

Example : test quantities (and existance)

if ! echo "SELECT * FROM departement LIMIT 0;" | sqlite3 cities.sqlite 2> /dev/null ; then

sqlite3 cities.sqlite <<$script$

.mode tabs

.import depts2015.txt departement

CREATE INDEX idx_dept on departement (DEP);

$script$

NB_DEPS=`echo "SELECT COUNT(*) FROM departement;" | sqlite3 cities.sqlite`

if [ $NB_DEPS -lt 100 ] ; then

echo "More than 100 departement expected but $NB_DEPS found"

exit 1

fi

fi

Don't update data, augment it !

- Example with files and SQL

- How much disk is worth an hour of your time ?

It's worth duplicating some data if you get rollback

Example : INSEE code for each city

ALTER TABLE city ADD COLUMN INSEE;

UPDATE city SET INSEE = DEP || COM;

CREATE TABLE city_insee AS

SELECT *, DEP || COM AS INSEE FROM city;

How much space is worth your time ?

A developer cost at least 200 € a day

A 240 GB SSD costs 60 €

1h <==> 100 GB !

You can spend a lot of space to same time... Don't hesitate to duplicate data if you trade it with rollback !

Use a build system for your data

- collaboration gets automatic

- eg : make, Drake, ex-continuous-processing or tuttle

- focus on tuttle

Continuous processing

- Update the data as soon as a team member commits a change in code

- Update the data when source data changes

- Can be operated by tools like Jenkins

It's is like your phone battery !

- The normal state is when every process is complete

- Reprocess what have changed as soon as you can

- You should never have to reprocess all... Unless there is a major issue

- It will have an impact on how you structure your project

Conclusion : Improve continuously

- software engineering is a good source of inspiration...

- ... like agile methods

- retrospectives helps your team find your its ways of improvement